Handling Millions of Requests at Egnyte

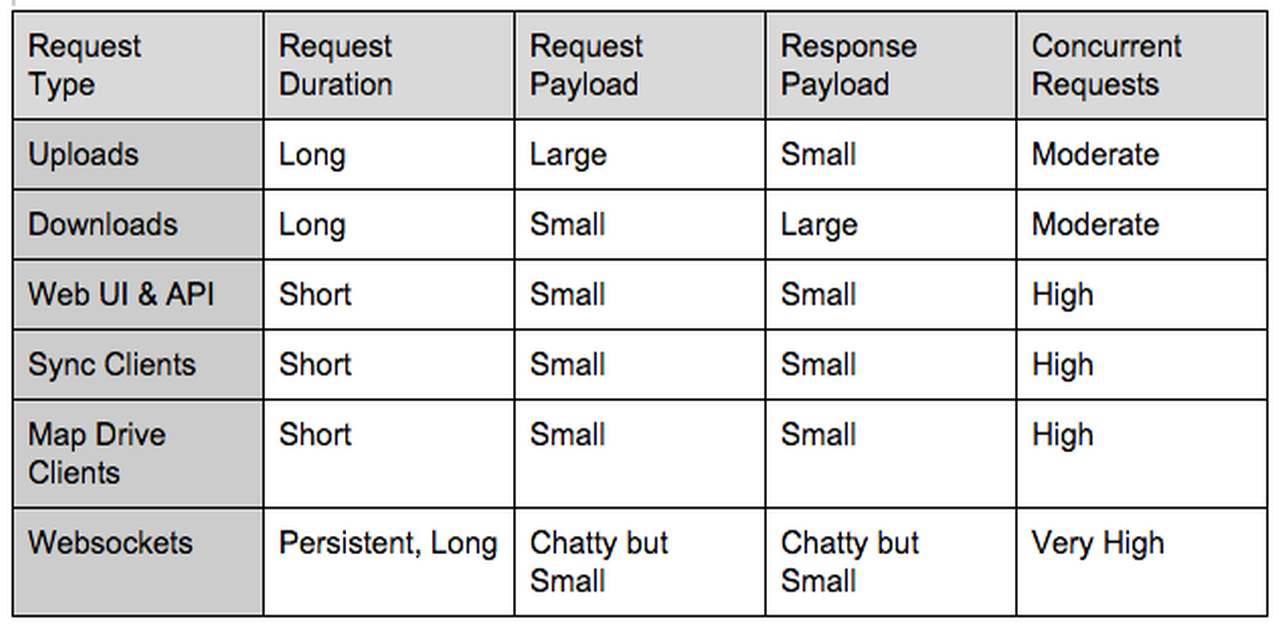

For a cloud service provider like us, serving HTTPS requests is at the core of our business. Although we support non-HTTP protocols as well, more than 90% of our traffic is over HTTPS. Browsers, desktop clients, mobile clients, and our on-premises appliances all communicate over HTTPS.

We get hundreds of millions of requests every day across our data centers. These requests can be classified along the following criteria:

1) Time taken to complete the request
2) Data payload of the request and response
3) Priority of the request

Over time, we have learned that the best way to protect our infrastructure from abusive requests is to isolate requests by type. Isolation prevents one request type from exhausting all the resources and causing a complete outage; instead, only the single affected feature of the application degrades gracefully.

Based on the nature of the requests, we have classified them into the following categories:

- Upload and Download: Egnyte supports unlimited file sizes, so some users upload objects as large as a few hundred gigabytes. These connections are long-lived and carry very large amounts of data. Uploads and downloads must be routed through a dedicated, high-bandwidth internal network route; otherwise they can quickly saturate the links, starve interactive traffic such as the Web UI, and cause a major outage.

- Websocket: Sync clients and mobile clients use websockets to listen for change events in Egnyte. These connections are chatty and long-lived, typically lasting as long as the client's machine is powered on. The number of websocket connections is also very high, roughly one per active client. This imposes a unique requirement on anything that processes websocket requests: it must be built on non-blocking I/O, and its network links must be isolated from the rest.

- Web UI/API: Interactive requests from browsers, mobile devices, etc. are short-lived and arrive at high to very high concurrency. They always need to be processed as quickly as possible to provide a seamless user experience.

- Sync Clients: These are Egnyte clients that query the server to sync cloud changes to local desktops. Like Web UI requests, they should be processed as quickly as possible, but their concurrency is very high, driven by the number of active clients.

- Map Drive Clients: Map Drive clients are known to be chatty and granular. We make sure only a small subset of resources is assigned to Map Drive clients, and abusive clients making excessive PROPFIND calls are throttled accordingly.
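
The isolation described above is commonly called the bulkhead pattern. Below is a minimal Python sketch of it, assuming each request has already been tagged with one of the categories: every category gets its own bounded pool of concurrent slots, and Map Drive PROPFIND calls additionally pass through a per-client token bucket. The class names, the `handle` function, the pool sizes and the rates are all hypothetical illustrations, not Egnyte's actual values or code.

```python
import threading
import time
from collections import defaultdict


class Bulkhead:
    """Caps in-flight requests per category so one request type cannot starve the others."""

    def __init__(self, limits):
        # limits maps a category name to its maximum number of concurrent requests
        self._slots = {cat: threading.BoundedSemaphore(n) for cat, n in limits.items()}

    def try_enter(self, category):
        # Non-blocking: when a category's pool is full, shed load instead of queueing
        return self._slots[category].acquire(blocking=False)

    def leave(self, category):
        self._slots[category].release()


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts of up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical pool sizes and rates; Map Drive deliberately gets a small share.
bulkhead = Bulkhead({"upload_download": 50, "web_ui_api": 200, "sync": 400, "map_drive": 20})
propfind_limits = defaultdict(lambda: TokenBucket(rate=5, capacity=10))


def handle(category, method, client_id, handler):
    """Run handler() inside its category's pool; return an HTTP-style status code."""
    if category == "map_drive" and method == "PROPFIND":
        if not propfind_limits[client_id].allow():
            return 429  # this client is making too many PROPFIND calls
    if not bulkhead.try_enter(category):
        return 503  # only this category is saturated; the other pools keep serving
    try:
        return handler()
    finally:
        bulkhead.leave(category)
```

Rejecting immediately with 503 when a pool is full, rather than blocking, is what turns an overload in one category into a degraded feature instead of a site-wide pile-up of waiting requests.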

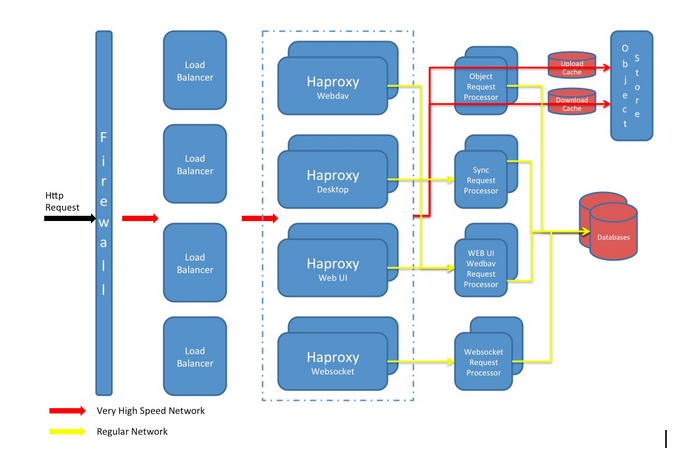

Based on our findings, we laid out our request flow as follows:

- Public requests terminate on our firewall

- The firewall forwards them to a number of hardware load balancers. Each hardware load balancer is just a TCP load balancer forwarding requests to a pool of HAProxy servers

- We use HAProxy servers to terminate SSL and act as a central router for all requests. HAProxy is a fast and efficient open source load balancer. We found that HAProxy can easily terminate SSL and load balance 10K concurrent requests to upstream servers on a standard four-core CentOS Linux machine. HAProxy uses an event-driven, poll-based model and, unlike Apache, can handle a very high number of concurrent long-running connections.
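
The central-router role can be sketched in Python. In HAProxy itself this is written as `acl` definitions plus `use_backend` rules, which are evaluated in declaration order with the first match winning; the function below only mimics that evaluation. Every path prefix, header value and backend name is hypothetical, and header names are assumed to be lowercased already.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    method: str
    path: str
    headers: dict = field(default_factory=dict)  # assumed: lowercased header names


# Ordered rules, first match wins. All names and prefixes are hypothetical.
RULES = [
    # Websocket upgrades go to dedicated non-blocking nodes
    ("websocket_nodes", lambda r: r.headers.get("upgrade", "").lower() == "websocket"),
    # Bulk transfers go over the dedicated object store network
    ("object_store", lambda r: r.path.startswith(("/upload/", "/download/"))),
    # Chatty WebDAV traffic is confined to a small pool
    ("mapdrive_nodes", lambda r: r.method == "PROPFIND" or r.path.startswith("/webdav/")),
    ("sync_nodes", lambda r: r.headers.get("user-agent", "").startswith("SyncClient")),
]
DEFAULT_BACKEND = "webui_api_nodes"


def route(req):
    """Return the backend pool for a request after TLS has been terminated."""
    for backend, matches in RULES:
        if matches(req):
            return backend
    return DEFAULT_BACKEND
```

Checking the websocket rule first matters: an upgrade request could otherwise match a later, more generic rule and land on nodes that are not built for long-lived connections.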

The network is a major part of any high-traffic application. If you don't design it with specific bandwidth dedicated to specific services, those services will quickly saturate the links and bring the entire data center down.

We found that it is best to isolate the network for uploads and downloads, which are very data intensive, and run them over a dedicated high-speed network. As seen in the picture above, our object store network, and every link leading to it all the way from the firewall, runs on dedicated network links.

Websockets are another beast that needs special attention, since their connections live practically forever. It's best to run websockets through their own load balancers and back-end nodes running NIO (non-blocking I/O) engines.

With the above architecture, we have been able to scale our deployments to our current needs and also provision for the future. Watch this space for a follow-up on how we deliver millions of change events to tens of thousands of active clients in near real time.
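
To illustrate why websocket nodes need non-blocking I/O, here is a minimal Python asyncio sketch in which a single event-loop thread holds many idle, long-lived connections and pushes one change event to all of them. Plain newline-delimited TCP stands in for the WebSocket protocol (the standard library has no WebSocket server), and the `ChangeFeed` class, the event string and the client count are hypothetical; this is not Egnyte's implementation.

```python
import asyncio


class ChangeFeed:
    """Keeps many long-lived client connections on one event loop and pushes events."""

    def __init__(self):
        self.clients = set()

    async def on_connect(self, reader, writer):
        self.clients.add(writer)
        try:
            # An idle client costs a suspended coroutine and a socket, not a blocked thread
            while await reader.readline():
                pass  # client heartbeats are ignored in this sketch
        finally:
            self.clients.discard(writer)
            writer.close()

    async def publish(self, event):
        targets = list(self.clients)
        for w in targets:
            w.write(event.encode() + b"\n")  # newline framing stands in for WebSocket frames
        await asyncio.gather(*(w.drain() for w in targets), return_exceptions=True)


async def demo(n_clients=100):
    feed = ChangeFeed()
    server = await asyncio.start_server(feed.on_connect, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    conns = [await asyncio.open_connection("127.0.0.1", port) for _ in range(n_clients)]
    while len(feed.clients) < n_clients:  # wait until every connection is registered
        await asyncio.sleep(0.01)
    await feed.publish("file_changed:/Shared/report.docx")  # hypothetical event
    lines = await asyncio.gather(*(r.readline() for r, _ in conns))
    for _, w in conns:
        w.close()
    server.close()
    await server.wait_closed()
    return lines
```

Running `asyncio.run(demo(100))` returns one copy of the event line per client. With a thread-per-connection server, each of those mostly idle clients would pin a thread and its stack for as long as it stays connected.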